Abstract

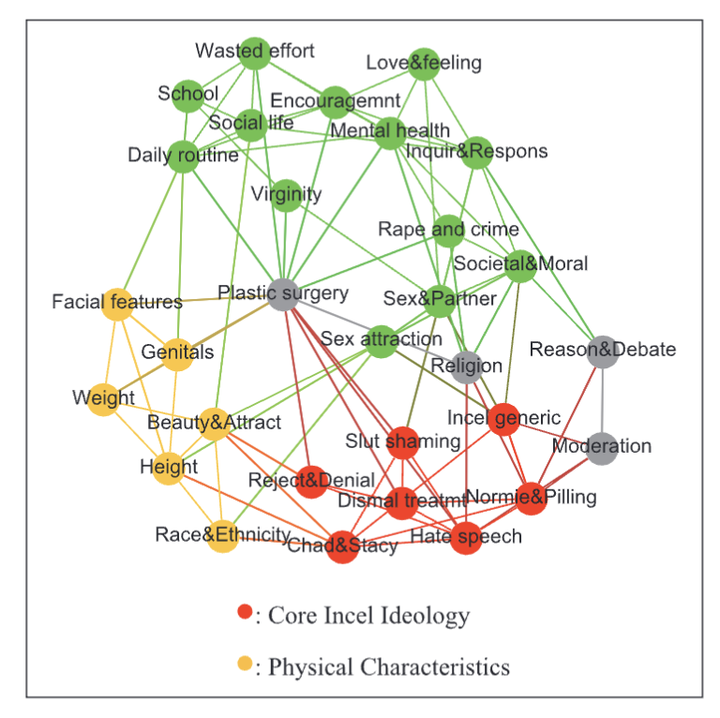

Recent instances of lethal mass violence have been linked to digital communities dedicated to misogynist and sexist ideologies. These forums often begin with discussions of more conventional or mainstream ideas, raising the question of how such communities transform from relatively benign to extremist. This article presents a study of the Reddit incel community, active from mid-2016 until its ban in late 2017, which evolved from a self-help forum into a hub for extremist ideologies. We use computational grounded theory to identify empirical patterns in forum composition, psychological states reflected in language use, and semantic content, and then refine and test an interactional process that explains this change: a shift away from drawing on real-world experiences in discussion toward a greater reliance on cognitively simple symbols of group membership. This shift, in turn, leads to more discussions centered on deviant ideology. The results confirm that the dynamics of conversation (specifically, how ideas are interpreted, reinforced, and amplified in recurrent, person-to-person interactions) are crucial for understanding cultural change in digital communities. Implications for the sociology of groups, culture, and interactions in digital spaces are discussed.